AI Chip Report: Analysis on the Market, Trends, and Technologies

The AI chip sector is in a transformative period, marked by rapid advances and adoption across many industries. With total funding of $4.11 billion and average annual employee growth of 9.86%, the sector offers a healthy environment for innovation and business growth. Key players are using AI chips to increase processing speed and efficiency, advancing applications in health, safety, automotive, manufacturing, and other fields. The industry's growth is further underscored by significant investments from firms such as Sinovation Ventures and Intel Capital, and by a notable rise in media attention, which together signal strong market interest in, and confidence in, AI chip technologies.

This report was last updated 432 days ago.

Topic Dominance Index of AI Chip

To determine the Dominance Index of AI Chip within the Trend and Technology ecosystem, we examine three time series: published articles, newly founded companies, and global search interest.

Key Activities and Applications

- Health and Wellness: Companies are developing AI chips for applications such as intelligent wellness tools, bioelectric resonance for disease treatment, and health monitoring systems.

- Semiconductor and Electronics Manufacturing: Firms design and produce memory chips, microcontroller units (MCUs), and processors for high-performance computing (HPC).

- AI-Enhanced Consumer Products: Businesses are building AI chips into consumer products, improving the user experience of smart devices and applications.

- Industrial Applications: AI chips are used in smart factories, robotics, and logistics to optimize operations and supply chain management.

- Automotive and Mobility: Companies are focusing on autonomous driving chips and on AI algorithms for vehicle intelligence and safety systems.

- Data and Cloud Services: Firms provide cloud-based solutions and data algorithms that support AI chip functions and generate user insights.

Emergent Trends and Core Insights

- Edge AI: A shift toward edge computing, in which AI chips are embedded in devices so data can be processed in real time on the device itself, avoiding the latency of sending it to the cloud and back.

- Healthcare Innovation: AI chips are increasingly used in medical devices and health monitoring, emphasizing preventive care and chronic disease management.

- Sustainable Energy: AI chips are being integrated into renewable energy systems, such as solar power installations, to improve efficiency and output.

- Investment in R&D: Significant funding is going into AI chip research and development, including collaborations between industry and academia.

Technologies and Methodologies

- In-Memory Computing: Performing computation inside or next to memory, which reduces data movement between memory and processor, to meet demanding energy-efficiency and performance requirements.

- Neuromorphic Systems: Development of chips and systems inspired by the neural structure of the human brain, aimed at faster, more energy-efficient computing.

- AI Accelerators: Specialized processors that enable machine learning at the edge, lowering power consumption and increasing speed.

- Semiconductor IP: Companies are innovating in semiconductor intellectual property to support a wide range of AI applications.

AI Chip Funding

A total of 89 AI Chip companies have received funding.

Overall, AI Chip companies have raised $3.5B.

Companies within the AI Chip domain have secured capital from 282 funding rounds.

The chart below shows the funding trendline of AI Chip companies over the last 5 years.

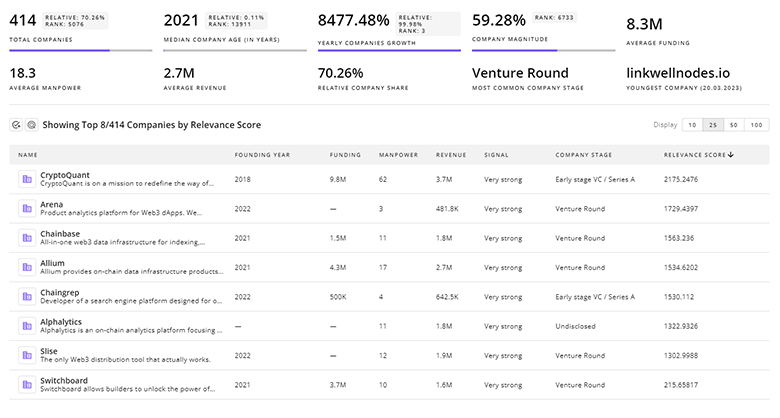

AI Chip Companies

TrendFeedr tracks 291 innovators and key players in AI Chip, with data on their funding, workforce, revenues, and stages.

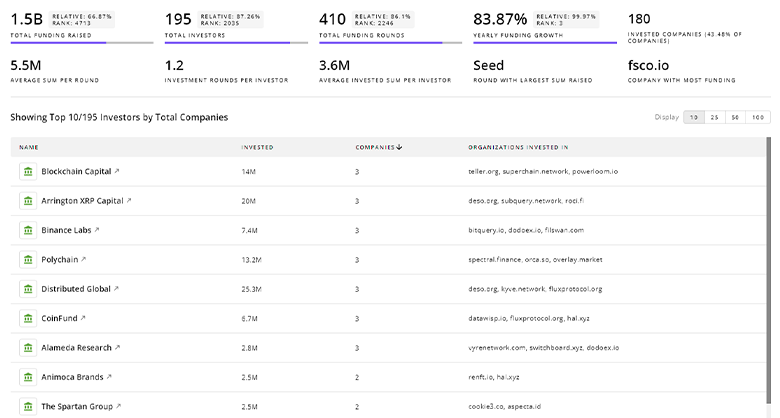

AI Chip Investors

TrendFeedr’s investors tool offers a detailed view of investment activity related to specific trends and technologies. It covers 130 AI Chip investors, including their funding rounds, invested amounts, and investment trends, providing an overview of market dynamics.


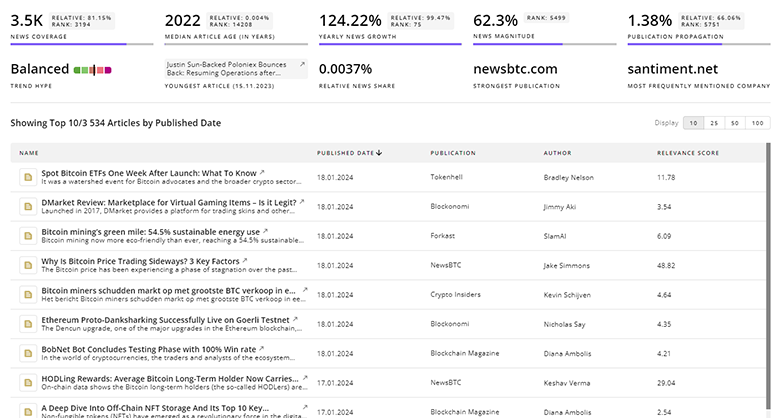

AI Chip News

TrendFeedr’s News feature provides access to 8.3K AI Chip articles. It is designed for professionals who want to understand both the historical trajectory and the current momentum of market trends.


Executive Summary

The AI chip industry stands at the forefront of technological innovation, driving advances across sectors from healthcare to automotive. The sector's robust funding, together with steady growth in its skilled workforce and in media attention, signals fertile ground for future growth. Strategic investments and a focus on R&D are propelling the development of cutting-edge AI chips that promise to raise efficiency and performance standards across the board. As companies continue to harness AI chips, we can expect a new wave of intelligent applications that transform the way we live and work.
