Data Storage Report: Analysis on the Market, Trends, and Technologies

The data storage market was valued at $186,750,000,000 in 2023 and is projected to reach $774,000,000,000 by 2032, implying a 17.1% compound annual growth rate. At that scale, firms are forced to choose between ultra-low-latency AI serving stacks and centuries-grade archival media.
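The growth rate can be checked from the two endpoint figures. The sketch below assumes annual compounding over the nine years from 2023 to 2032; the function name `cagr` is illustrative and not taken from the report.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.10 means 10%)."""
    return (end_value / start_value) ** (1 / years) - 1


# Market size figures quoted in the report, in US dollars.
market_2023 = 186_750_000_000
market_2032 = 774_000_000_000

# Assumption: nine compounding periods between the two endpoints.
rate = cagr(market_2023, market_2032, years=2032 - 2023)
print(f"{rate:.1%}")  # prints 17.1%
```

The result matches the quoted 17.1%, so the three headline numbers are mutually consistent.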

We updated this report 26 days ago.

Topic Dominance Index of Data Storage

The Topic Dominance Index trendline combines Data Storage's share of voice across three data sources: published articles, founded companies, and global search.

Key Activities and Applications

- AI/HPC data serving: Engineering storage platforms to sustain continuous GPU utilization is now a primary activity; systems prioritize latency and bandwidth over classical aggregate IOPS, and vendors build software-defined arrays tailored to AI pipelines to reduce expensive compute idle time.

- Long-term archival preservation: Enterprises and cultural institutions are investing in non-magnetic media (optical, ceramic, DNA) to avoid repeated large-scale migrations and to preserve data for decades to centuries, shifting procurement from refresh cycles to media permanence strategies.

- Unstructured data governance and cost control: Visibility and lifecycle rules for unstructured datasets (SMB/NFS/object) drive activity in discovery, orphan-data identification, and policy-driven archiving to cut storage TCO and compliance exposure.

- Hybrid and multi-cloud orchestration: Movement of workloads across on-prem, edge, and multiple clouds to meet latency, sovereignty, and economics requirements is now core operational work for I&O teams.

- Ransomware resilience and cryptographic durability: Implementing network-coding, immutable object histories, and cryptographic splitting to enable rapid recovery and minimize recovery cost is an emerging operational imperative.

- Edge and modular deployment for low latency: Placing storage close to inference/ingest points (edge nodes, modular pods) is increasingly standard for real-time analytics and IoT aggregation.
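The lifecycle rules described under unstructured data governance can be pictured as a small classification step over file metadata. This is a minimal sketch, not any vendor's method: the `FileRecord` fields, the `classify` function, the 365-day threshold, and the sample paths are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FileRecord:
    path: str
    owner: str
    days_since_access: int


def classify(record: FileRecord, active_owners: set[str],
             archive_after_days: int = 365) -> str:
    """Return 'orphan', 'archive', or 'keep' for one file record."""
    if record.owner not in active_owners:
        return "orphan"   # no current owner: flag for review
    if record.days_since_access >= archive_after_days:
        return "archive"  # cold data: candidate for a cheaper tier
    return "keep"


# Hypothetical inventory, as a discovery scan might produce it.
records = [
    FileRecord("/proj/a/model.bin", "alice", 12),
    FileRecord("/proj/b/raw.tar", "bob", 900),
    FileRecord("/home/carol/old.zip", "carol", 40),
]
active = {"alice", "bob"}

plan = {r.path: classify(r, active) for r in records}
print(plan)
```

Orphan detection runs before the age check here, on the assumption that ownerless data needs human review before it is archived or deleted.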

Emergent Trends and Core Insights

- Architectural bifurcation: The market is fracturing into two high-conviction plays: high-velocity AI/HPC storage and ultra-long-term archival media, with mid-market generalists experiencing margin compression as capital concentrates around winners.

- Policy-driven, metadata-centric management: Systems that treat metadata and policies as primary drivers of placement and lifecycle decisions deliver efficiency gains measured in tens of percentage points; patents and vendor roadmaps emphasize metadata separation and attribute-based tiering.

- NVMe proliferation and NVMe-over-TCP acceleration: NVMe and NVMe-oF are the chosen path for low-latency block access; NVMe/TCP implementations and controller innovations reduce host bottlenecks for AI training and inference workloads.

- Data governance for trustworthy AI: Storage solutions embed lineage, versioning, and immutable object creation to support auditable model training and inference, responding to regulatory and quality demands identified by AI teams.

- Emerging media race (DNA, ceramic, optical): DNA and ceramic media are moving from lab prototypes to pilotable racks; investment flows signal that organizations with large cold archives will evaluate non-electronic media as a capex/opex alternative to tape refresh cycles.

- Sustainability influences procurement: Energy consumption and renewable integration now appear in procurement criteria; vendors offering liquid cooling, N2 power optimizations, or higher density per kW gain leverage in RFPs.

- Decentralized storage for verifiability and cost arbitrage: Layer-2 and blockchain-backed storage options position themselves as verifiable, cost-effective alternatives for specific archival and regulatory use cases, particularly when data provenance matters (DeStor).
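One way to picture the lineage, versioning, and immutable object histories mentioned above is a hash-chained version log, in which each entry commits to its predecessor so that later edits become detectable. The sketch below is an assumption-laden illustration using only the Python standard library; the entry fields and the helper names `append_version` and `verify` are hypothetical and do not describe any specific product.

```python
import hashlib
import json


def _digest(body: dict) -> str:
    """SHA-256 over a canonical JSON encoding of an entry body."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


def append_version(history: list[dict], payload: bytes, note: str) -> list[dict]:
    """Return a new history with one more entry linked to the previous one."""
    body = {
        "version": len(history) + 1,
        "content_sha256": hashlib.sha256(payload).hexdigest(),
        "note": note,
        "prev_hash": history[-1]["entry_hash"] if history else "",
    }
    return history + [{**body, "entry_hash": _digest(body)}]


def verify(history: list[dict]) -> bool:
    """Check that every entry hashes correctly and links to its predecessor."""
    prev_hash = ""
    for entry in history:
        body = {k: v for k, v in entry.items() if k != "entry_hash"}
        if entry["prev_hash"] != prev_hash or entry["entry_hash"] != _digest(body):
            return False
        prev_hash = entry["entry_hash"]
    return True


history = append_version([], b"training-set v1", "initial import")
history = append_version(history, b"training-set v2", "deduplicated")
print(verify(history))   # True

# Editing an earlier entry breaks its stored hash, so verification fails.
tampered = [dict(history[0], note="edited"), history[1]]
print(verify(tampered))  # False
```

An auditor who holds only the latest `entry_hash` can confirm that the full history of a training dataset has not been rewritten, which is the property auditable model training depends on.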

Technologies and Methodologies

- Software-Defined Storage (SDS) platforms: SDS continues to be the dominant enabler for hardware abstraction, unified management, and lifecycle automation across hybrid estates.

- NVMe and NVMe-oF ecosystems: Adoption of NVMe over Fabrics (NVMe-oF) transports, including NVMe/TCP, reduces end-to-end latency for AI training datasets and high-concurrency OLTP/OLAP workloads.

- Computational and in-situ storage: Offloading lightweight data transforms and pre-processing to storage nodes (computational storage) reduces network movement and accelerates analytics on-device.

- Intelligent tiering and ML-driven DLM: Automated policies using telemetry and predictive models move data between flash, disk, cloud, and tape/DNA tiers, optimizing cost against access SLAs.

- Network coding and cryptographic splitting: These methods reduce repair bandwidth, accelerate reconstruction, and harden archives against targeted corruption while improving usable capacity.

- DNA and ceramic media write/read workflows: New synthesis and sequencing tooling, combined with specialized indexing and retrieval layers, create workable archival workflows that prioritize density and longevity over latency (researchandmarkets, DNA Data Storage).

- Object storage as canonical substrate for unstructured data: Object stores (S3-compatible) provide scalable metadata, immutability, and policy hooks; they underpin data lakes, analytic pipelines, and long-term retention (MIT Technology Review, Object Storage).

- Modular, prefabricated data center design: Rapid capacity deployment at the edge and regional hubs uses modular racks and offsite manufacturing to align capacity with latency and regulatory constraints.
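Cryptographic splitting, listed above, can be illustrated in its simplest form with XOR-based secret sharing: the data is split into shares that individually look random, and all shares are needed to rebuild it. This is a deliberately minimal sketch under stated assumptions. It is an all-or-nothing (n-of-n) scheme, it does not implement network coding or reduce repair bandwidth, and it should not be read as how any vendor named in this report works. The function names are hypothetical.

```python
import secrets
from functools import reduce


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Byte-wise XOR of two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))


def split(data: bytes, n: int) -> list[bytes]:
    """Split data into n shares; all n are required to rebuild it."""
    if n < 2:
        raise ValueError("need at least two shares")
    # n - 1 shares are pure randomness of the same length as the data.
    random_shares = [secrets.token_bytes(len(data)) for _ in range(n - 1)]
    # The final share is the data XORed with every random share.
    final_share = reduce(xor_bytes, random_shares, data)
    return random_shares + [final_share]


def combine(shares: list[bytes]) -> bytes:
    """XOR all shares together to recover the original data."""
    return reduce(xor_bytes, shares)


block = b"backup block 0042"
shares = split(block, 3)
recovered = combine(shares)
print(recovered == block)  # True
```

Because any subset smaller than the full set reveals nothing about the data, an attacker who compromises a single storage location cannot read the archive. A production design would typically pair this idea with a threshold or erasure-coded scheme so that losing one share does not lose the data.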

Data Storage Funding

A total of 6.5K Data Storage companies have received funding.

Overall, Data Storage companies have raised $1.1T.

Companies within the Data Storage domain have secured capital across 22.0K funding rounds.

The chart shows the funding trendline of Data Storage companies over the last 5 years.

Data Storage Companies

- PEAK:AIO — PEAK:AIO builds software-defined storage designed exclusively for AI workloads, prioritizing ultra-low latency and high bandwidth to reduce GPU idle time and shift budget from storage silos to compute. The company packages its platform as AI Data Servers integrated with mainstream server vendors to simplify deployment and maximize GPU utilization. Their approach is attractive to teams that measure storage ROI by effective GPU hours rather than raw TB costs.

- Cerabyte — Cerabyte prototypes Ceramic-on-Glass media and laser-matrix writing plus CMOS reading to deliver a permanent, low-power archival rack architecture aimed at exabyte to yottabyte horizons. The company emphasizes media longevity and sustainability to avoid the continual migration costs that burden large archives, positioning ceramic racks as an enterprise archival alternative. Their TRL6 prototype targets organizations needing multi-decadal retention without repeated refresh cycles.

- Biomemory — Biomemory develops DNA-based, rackable appliances targeted at enterprise archival customers and governmental archives, coupling molecular engineering with data-center form factors to achieve high density and low maintenance. The firm also markets DNA anti-counterfeiting products; its dual commercial/research focus helps fund incremental improvements in synthesis/read pipelines. With venture funding and a Paris-based R&D footprint, they aim to commercialize DNA as a practical archive tier for regulated sectors.

- Nexodata Inc — Nexodata Inc offers a software layer employing network coding and cryptographic splitting to increase storage efficiency and to harden backups against ransomware; their solution claims a 25% storage efficiency improvement and faster repair times for distributed arrays. The product targets data centers and hybrid clouds as an overlay that can be deployed without forklift upgrades, enabling measurable TCO and ESG benefits through lower capacity needs. Their patented approach positions them as a defensible specialist for resilience-first procurement decisions.

- Leil Storage — Leil Storage focuses on hyperscaler-grade efficiency for on-prem environments by combining advanced HDD techniques and host-managed SMR variants to optimize TB per kW and reduce migration pain. The company targets customers that require data sovereignty and lower TCO at petabyte scale, promising incremental cost improvements as capacity scales from zero to many petabytes. Their value proposition centers on providing hyperscale economics without mandatory cloud migration, appealing to regulated industries with large cold datasets.

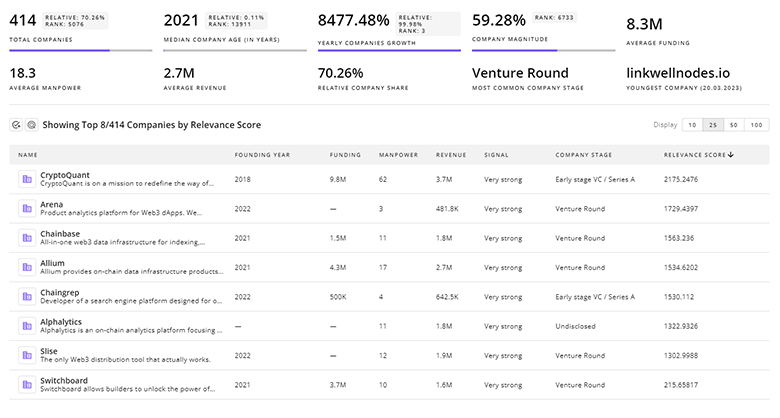

Gain a better understanding of the 74.1K companies that drive Data Storage, and of how mature and well funded they are.


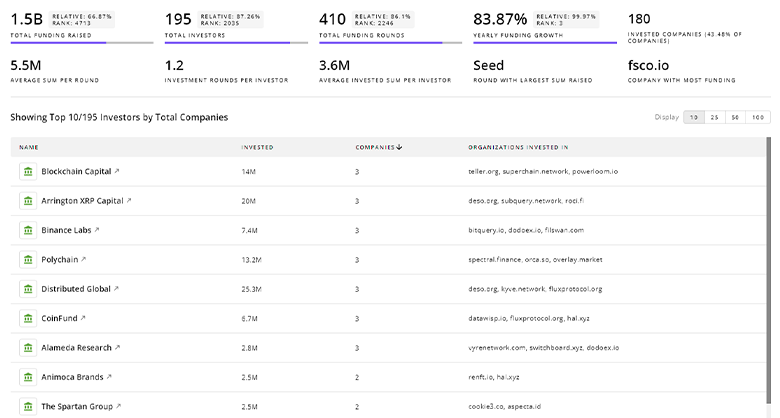

Data Storage Investors

Gain insights into 18.8K Data Storage investors and investment deals. TrendFeedr’s investors tool presents an overview of investment trends and activities, helping create better investment strategies and partnerships.


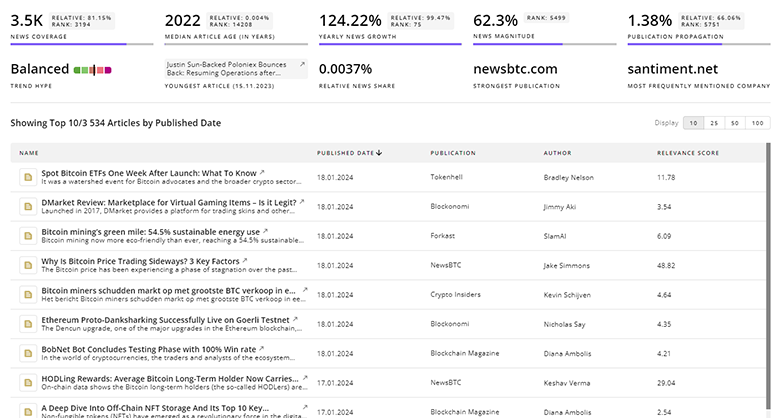

Data Storage News

Gain a competitive advantage with access to 96.4K Data Storage articles through TrendFeedr's News feature. The tool offers an extensive database of articles covering recent trends and past events in Data Storage, enabling innovators and market leaders to make well-informed, fact-based decisions.


Executive Summary

The data storage landscape now requires binary strategic decisions: invest in ultra-low-latency, controller- and NVMe-centric platforms that convert storage into a force multiplier for expensive compute, or commit to non-electronic archival media that removes perpetual migration risk. Near term, winning propositions combine software-defined control planes, ML-driven lifecycle automation, and energy-efficient infrastructure; long term, organizations with very large cold archives will evaluate ceramic and DNA options to contain migration and operational expense. For buyers, procurement must shift from pure TB/unit comparisons to composite metrics that include GPU utilization impact, lineage and audit capabilities, and lifecycle energy cost. Firms that standardize metadata and policy fabrics will capture most of the efficiency gains, while those that continue to treat storage as passive capacity will face rising operational costs and strategic exposure.
